Introduction

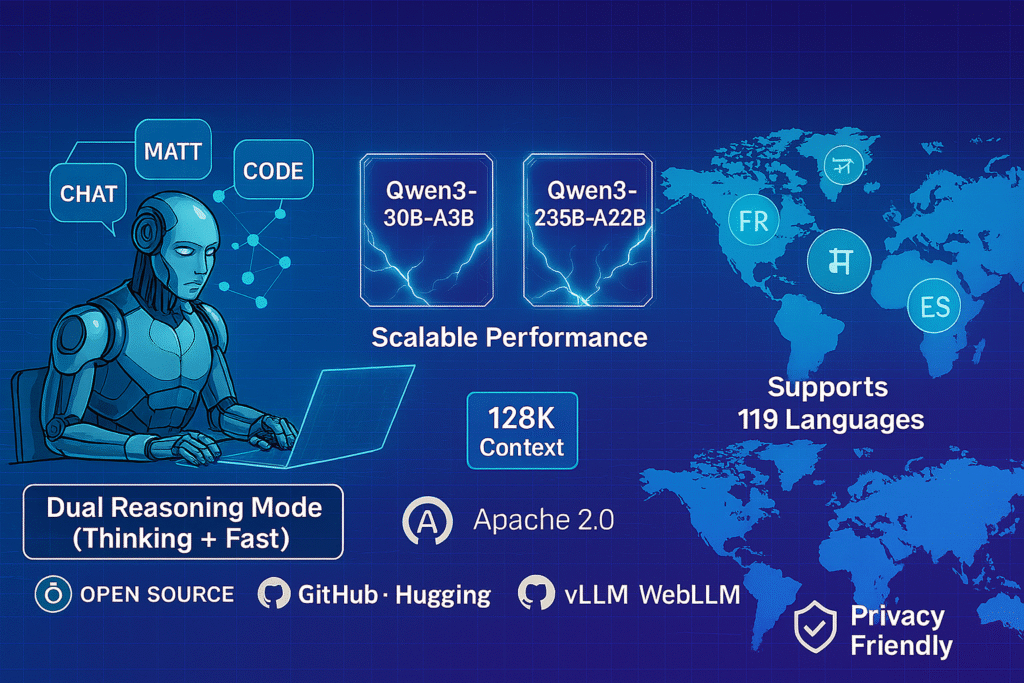

Alibaba Cloud has released Qwen3, the latest generation of the Qwen large language model family, at a time when the field is pushing toward AI that is smarter, faster, and more responsible. Qwen3 is more than a single model: it is a family of open models built around hybrid reasoning, multilingual fluency, and scalable performance. Whether you're building multilingual apps, coding copilots, chatbots, or knowledge agents, Qwen3 aims to pair strong performance with efficiency and flexibility. With dual-mode reasoning, support for 119 languages and dialects, and up to 235 billion parameters, it raises the bar for open, approachable, and capable AI.

Here’s what makes Qwen3 stand out:

1. Dual Reasoning Modes (Hybrid Architecture)

Qwen3 is among the first open-weight LLM families to offer two distinct reasoning modes in a single model:

- Thinking Mode: Designed for tasks requiring deep, step-by-step reasoning — such as coding, math, and scientific analysis.

- Non-Thinking Mode: Fast and responsive for everyday chat, summarization, translation, and content generation.
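To make the two modes concrete, here is a minimal sketch of how an application might request a mode and separate the reasoning from the final answer. It assumes the conventions described in the Qwen3 model cards: a `/think` or `/no_think` soft switch appended to the user prompt, and reasoning wrapped in `<think>...</think>` tags in the raw output. The helper names `with_mode` and `split_reasoning` are hypothetical and not part of any Qwen library.

```python
import re

# Assumption: in Thinking Mode the raw output looks like
# "<think>...reasoning...</think>final answer".
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode(prompt: str, thinking: bool) -> str:
    """Append the soft switch that requests Thinking or Non-Thinking Mode."""
    return f"{prompt} {'/think' if thinking else '/no_think'}"


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from raw model output."""
    match = THINK_BLOCK.search(text)
    if match is None:
        # No reasoning block present: treat the whole text as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = text[match.end():].strip()
    return reasoning, answer


# Hypothetical usage: ask for step-by-step reasoning on a math question.
prompt = with_mode("What is 17 * 23?", thinking=True)
reasoning, answer = split_reasoning("<think>17 * 23 = 391</think>\n\n391")
```

In practice you would likely keep the reasoning for logging or debugging and show only the answer to end users.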

2. Efficient Scale with MoE Models:

The Qwen3 lineup includes two powerful Mixture-of-Experts variants:

- Qwen3-30B-A3B (30B total, ~3B active)

- Qwen3-235B-A22B (235B total, ~22B active)
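The figures above imply that only about a tenth of each model's parameters are used per token. A quick back-of-the-envelope check, using the rounded parameter counts from the list (the function name is illustrative):

```python
def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per token, given counts in billions."""
    return active_b / total_b


# Rounded counts taken from the model names; the real numbers are approximate.
models = {"Qwen3-30B-A3B": (30, 3), "Qwen3-235B-A22B": (235, 22)}

for name, (total, active) in models.items():
    share = active_fraction(total, active)
    print(f"{name}: {share:.1%} of parameters active per token")
```

Note that this ratio describes compute per token, not memory: all experts generally still need to be loaded (or offloaded) for inference.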

3. Fluent in 119 Languages:

Qwen3 expands from 29 to 119 supported languages and dialects, making it one of the most multilingual LLMs in the open-source space. Whether you’re working in English, Hindi, Swahili, French, or Mandarin, Qwen3 is trained to deliver natural, fluent output.

4. Open, Flexible, and Developer-Friendly:

All Qwen3 models are released under the Apache 2.0 license and are available through, or compatible with, a broad set of platforms and runtimes:

- Hugging Face, GitHub, ModelScope, vLLM, MLX, llama.cpp, WebLLM, and more.

5. Feature Comparison:

| Feature | Qwen3 (Alibaba) | GPT-4 (OpenAI) | Claude 3 (Anthropic) | DeepSeek-V2 / InternLM2 |
|---|---|---|---|---|
| Reasoning Mode | Hybrid (thinking + fast) | Single-mode | Single-mode | Mostly fast-mode |
| Parameters | Up to 235B total (22B active, MoE) | Not disclosed | Not disclosed | 236B total, 21B active (DeepSeek-V2) |
| Context Length | Up to 128K | Up to 128K (GPT-4 Turbo) | 200K | 128K (DeepSeek-V2) |
| Licensing | Apache 2.0 (open weights) | Closed-source | Closed-source | Mixed licenses |
| Deployment Support | Hugging Face, vLLM, etc. | Hosted API only | Hosted API only | Local + Cloud |
| Cost to Use | Free weights (you pay for compute) | Paid tiers | Paid tiers | Mostly free |

What Makes Qwen3 Different

- Reasoning That Thinks Like a Human

Instead of answering in a single pass, Qwen3's Thinking Mode lets the model work through a task much as a person would, breaking a complex problem into steps before committing to an answer. This is especially useful for math, programming, legal, and scientific work.

- Designed for Real-World Efficiency

By activating only a subset of parameters for each token during inference, the MoE versions of Qwen3 reduce latency and compute cost compared with a dense model of the same total size. The result is a design that scales and saves energy while aiming to preserve output quality.
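The idea of activating only a subset of parameters can be illustrated with a toy top-k router. This is a generic Mixture-of-Experts sketch, not Qwen3's actual implementation: the expert count, the value of `k`, the renormalization step, and all function names are assumptions chosen for readability.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; the rest never run."""
    weights = route_top_k(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts: each one simply scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical router scores for a single token across the 8 experts.
logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

weights = route_top_k(logits, k=2)
output = moe_forward(1.0, experts, logits, k=2)
```

Here only two of the eight experts are evaluated for the token, which is where the compute savings come from; a real model applies this routing inside each MoE layer.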

- Privacy and Transparency

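With openly released weights and published technical documentation, Qwen3 avoids many of the black-box limitations of closed AI. You can inspect, fine-tune, and adapt the model as needed, and you can run it on your own infrastructure so that sensitive data never leaves your environment. That makes it a strong choice for enterprise and research settings.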

The Open-Source AI Revolution

Qwen3 marks a new phase for open AI. The story is not only about larger models; it is also about smarter architectures, developer autonomy, and practical usability. With its combination of deep reasoning, efficient performance, and permissive licensing, Qwen3 is well positioned to help lead the next wave of generative AI platforms. Whether you're experimenting with cutting-edge reasoning, fine-tuning for specific tasks, or deploying AI at scale, Qwen3 gives you both power and flexibility.