Unveiling GPT-4o: A Comprehensive Guide to OpenAI’s Latest Multimodal Marvel

OpenAI’s latest release, GPT-4o, represents a significant advancement in artificial intelligence, particularly among large multimodal models. Building on the capabilities of GPT-4 with Vision, GPT-4o is designed to offer a more integrated user experience and more natural human-computer interaction. This guide explains what GPT-4o is and how it differs from its predecessors, evaluates its performance, and discusses its potential use cases.

What is GPT-4o?

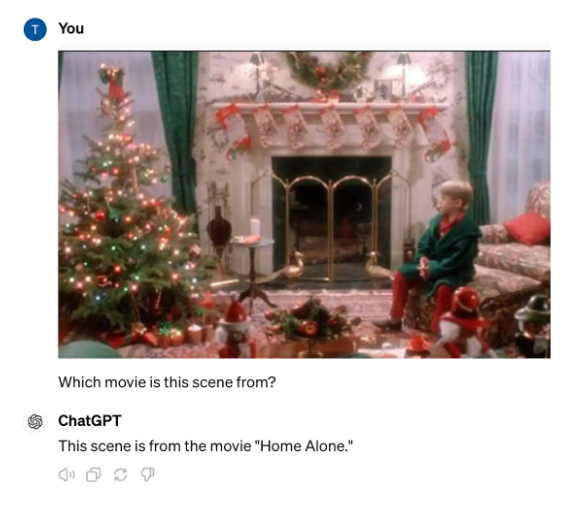

GPT-4o, where the “o” stands for “omni” (meaning ‘all’ or ‘universally’), was unveiled during a live-streamed announcement and demonstration on May 13, 2024. This multimodal model integrates text, visual, and audio input and output capabilities, building on the previous iteration, GPT-4 Turbo. The primary advantage of GPT-4o is its ability to handle multiple modalities within a single model. Previous versions relied on multiple specialized models, such as separate models for voice-to-text, text-to-voice, and text-to-image conversion. This integration results in a more cohesive and efficient user experience.

Key Enhancements in GPT-4o

GPT-4o brings several notable improvements over its predecessors, making it a powerful tool for various applications:

- Speed and Cost Efficiency: GPT-4o is twice as fast as GPT-4 Turbo and 50% cheaper, with input tokens priced at $5 per million and output tokens at $15 per million. Its rate limits are also five times higher, at up to 10 million tokens per minute.

- Extended Context Window: With a context window of 128K tokens, GPT-4o can process and retain a large amount of information, which helps it maintain context over extended interactions.

- Multimodal Capabilities: GPT-4o supports text, visual, and audio input and output natively. This multimodal integration allows for more natural and fluid interactions, enabling the model to understand and generate content across various formats, including video.

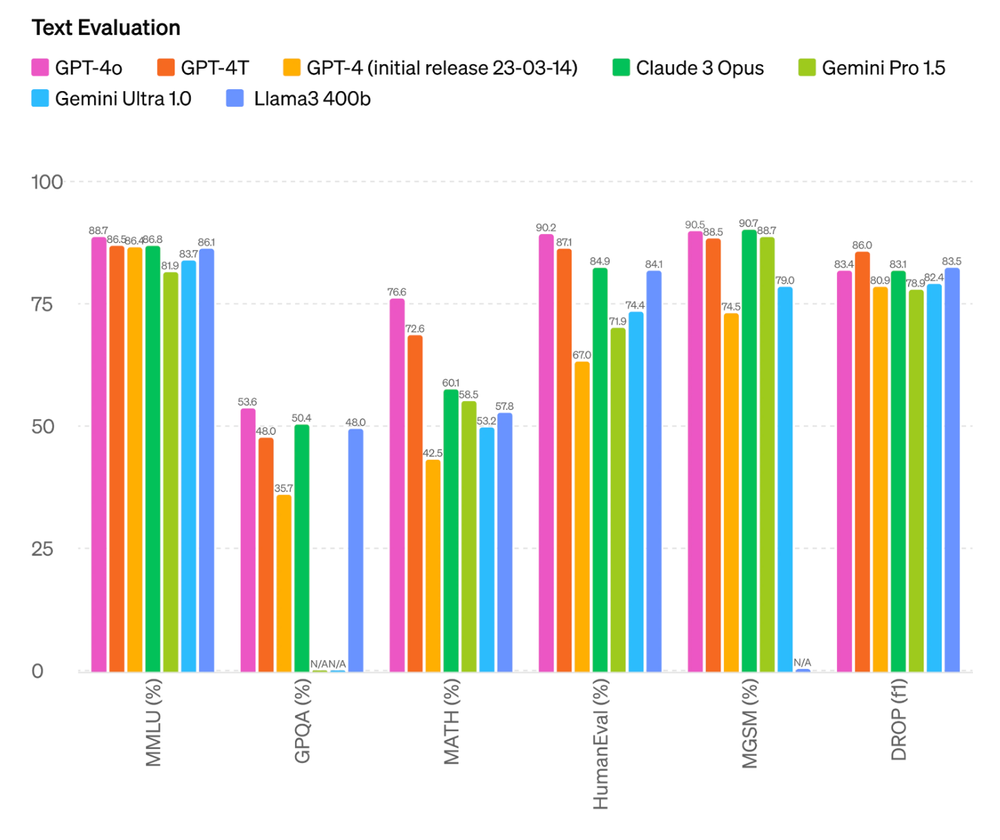

- Enhanced Performance: According to OpenAI, GPT-4o outperforms previous models and competitors such as Anthropic’s Claude 3 Opus, Google’s Gemini, and Meta’s Llama 3 on benchmarks. It also demonstrates superior speed and efficiency, particularly in tasks requiring rapid responses.
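
To make the pricing in the first bullet concrete, here is a minimal sketch of a per-request cost estimate. It uses only the two per-million-token prices quoted above; the function name and the example token counts are hypothetical, and actual billing may differ from this simple arithmetic.

```python
# Prices quoted in the list above, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 5.00
OUTPUT_USD_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts (hypothetical helper)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Hypothetical request: 12,000 input tokens and 800 output tokens.
print(f"${estimate_cost_usd(12_000, 800):.4f}")  # prints $0.0720
```

Because output tokens cost three times as much as input tokens at these prices, long generated responses tend to dominate the bill even when prompts are large.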

Performance Evaluation

Text Capabilities

GPT-4o performs slightly better than, or comparably to, other large multimodal models (LMMs), including previous GPT-4 versions and competing models. OpenAI’s benchmark results highlight its accuracy and fluency in text-based tasks, maintaining its position as a leading language model.

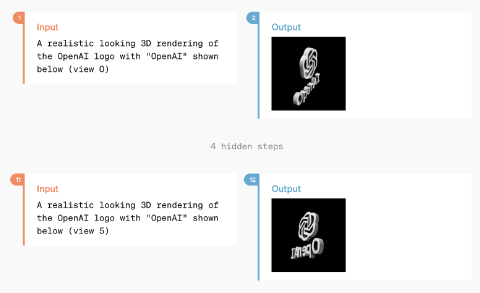

Video Capabilities

GPT-4o’s ability to understand and generate video content marks a significant advancement. The model processes video by converting it into frames (2-4 frames per second), and it can generate short videos, including 3D model video reconstructions. This capability is particularly useful for applications requiring visual content generation and analysis.
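
The frame-conversion step can be illustrated with a small sketch that picks which frames of a clip to keep so that roughly 2-4 frames per second remain. This is not OpenAI's actual preprocessing, which the announcement does not detail; the function name and parameters are hypothetical, and decoding the frames themselves (for example with a video library) is left out.

```python
def sample_frame_indices(total_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return the indices of frames to keep so about target_fps frames per second remain."""
    if total_frames < 0 or source_fps <= 0 or target_fps <= 0:
        raise ValueError("total_frames must be >= 0 and frame rates must be > 0")
    # Keep one frame every `step` source frames; never sample faster than the source.
    step = max(source_fps / target_fps, 1.0)
    indices = []
    k = 0
    while int(k * step) < total_frames:
        indices.append(int(k * step))
        k += 1
    return indices


# A hypothetical 3-second clip recorded at 30 fps, sampled at 2 fps.
print(sample_frame_indices(total_frames=90, source_fps=30, target_fps=2))
# prints [0, 15, 30, 45, 60, 75]
```

Sampling at the lower end of the range keeps the number of images per request small, which matters when each frame counts against the context window.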

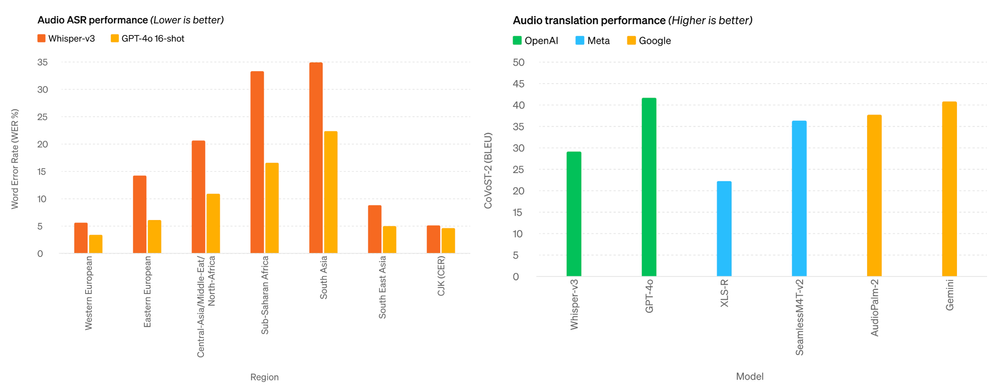

Audio Capabilities

GPT-4o can ingest and generate audio files with a high degree of control over the generated voice. It can alter tones, change speech speed, and even sing on demand. Additionally, it excels in automatic speech recognition (ASR) and outperforms previous state-of-the-art models like OpenAI’s Whisper-v3 and those from Meta and Google.

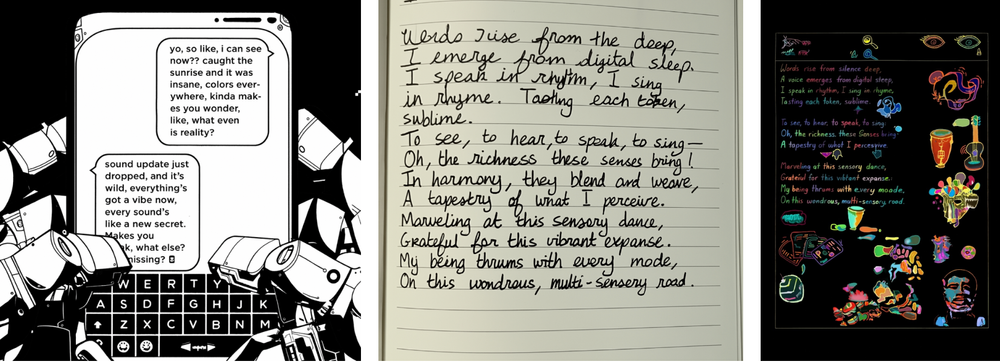

Image Generation

GPT-4o’s image generation capabilities are impressive, with demonstrations of one-shot reference-based image generation and accurate rendering of text within images. It can create custom fonts and generate images based on specific prompts, showcasing its versatility in visual content creation.

Use Cases for GPT-4o

The advancements in GPT-4o open up a plethora of new applications across various fields:

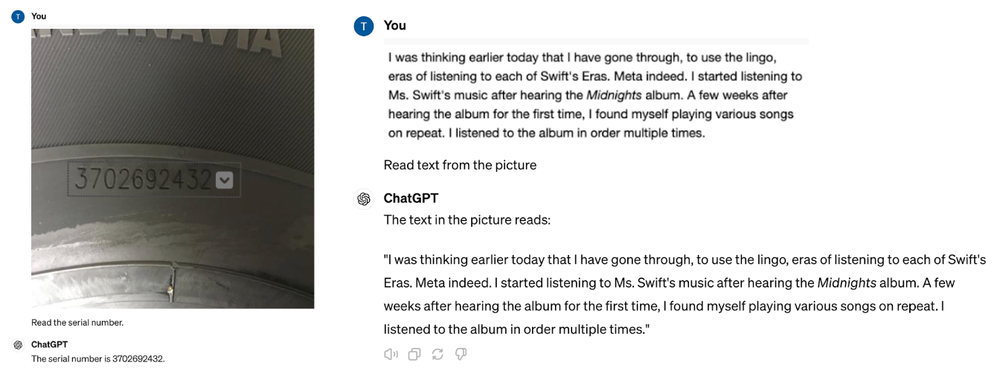

Real-time Computer Vision

The speed improvements and multimodal integration enable real-time computer vision applications. GPT-4o can quickly gather intelligence from the world around you, aiding in navigation, translation, guided instructions, and complex data understanding.

One-device Multimodal Applications

GPT-4o can be used from desktop, mobile, and potentially wearable devices, giving users a single interface for troubleshooting and other tasks. Users can interact with the model through visual and audio inputs, reducing the need to switch between different models and screens.

Enterprise Applications

GPT-4o’s enhanced performance and multimodal capabilities make it suitable for enterprise applications, particularly those that do not require fine-tuning on custom data. It can be used in conjunction with open-source models to create comprehensive AI solutions for businesses.

Customer Support

GPT-4o’s ability to understand and respond accurately to customer inquiries makes it an excellent tool for customer support. It can handle a wide range of questions, provide detailed information, and escalate issues when necessary, improving customer satisfaction.

Content Creation

From writing articles and generating creative content to producing visual and audio media, GPT-4o’s advanced capabilities make it a valuable asset for content creators. It can assist in brainstorming, drafting, and optimizing content across multiple formats.

Education and Training

GPT-4o can enhance educational experiences by providing personalized learning, tutoring, and educational content creation. Its ability to answer complex questions and explain concepts clearly makes it an invaluable resource for students and educators.

Healthcare

In healthcare, GPT-4o can provide information on medical conditions, treatment options, and general health advice. It can be integrated into telemedicine platforms for preliminary consultations and triage, helping to streamline patient care.

Challenges and Future Directions

While GPT-4o represents a significant advancement, it is not without challenges:

Bias and Fairness

Despite efforts to reduce biases, GPT-4o can still exhibit unintended biases. Continuous research and development are necessary to further mitigate these biases and ensure fair and equitable interactions.

Understanding Nuance and Context

Although GPT-4o has improved in understanding context, there are still instances where it may misinterpret nuanced queries. Ongoing refinement is needed to enhance its comprehension abilities.

Ethical Considerations

As AI becomes more integrated into daily life, ethical considerations become increasingly important. Ensuring that GPT-4o is used responsibly and ethically, particularly in sensitive areas such as healthcare, is crucial.

Scalability and Integration

Integrating GPT-4o into existing systems and scaling its deployment across various platforms can present logistical challenges. Developing robust APIs and ensuring seamless integration are essential for maximizing its potential.
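
As one illustration of what integration might involve, the sketch below builds a request body that combines a text prompt with an image. It assumes the message format of OpenAI's Chat Completions API as documented around GPT-4o's release (a `content` list mixing `text` and `image_url` parts, with the image passed as a base64 data URL); the helper name is hypothetical, no network call is made, and the current API reference should be checked before relying on these field names.

```python
import base64
import json


def build_vision_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a JSON-serializable request body with one text part and one image part."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    # The image is embedded inline as a data URL (assumed format).
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real image file read from disk.
payload = build_vision_request("Describe this image.", b"placeholder image bytes")
print(json.dumps(payload, indent=2)[:80])
```

Keeping payload construction separate from the HTTP call makes it easier to unit-test an integration and to add retries or rate-limit handling around the network layer later.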

Conclusion

GPT-4o’s improvements, including its speed, cost efficiency, extended context window, and multimodal capabilities, make it a groundbreaking tool for AI applications. Its ability to handle text, visual, and audio inputs and outputs in a single model creates a more natural and efficient human-computer interaction experience. As OpenAI continues to push the boundaries of AI technology, GPT-4o stands as a testament to the potential of large multimodal models to transform various industries and enhance our interactions with technology.